Trait judgments of faces using a task without word labels

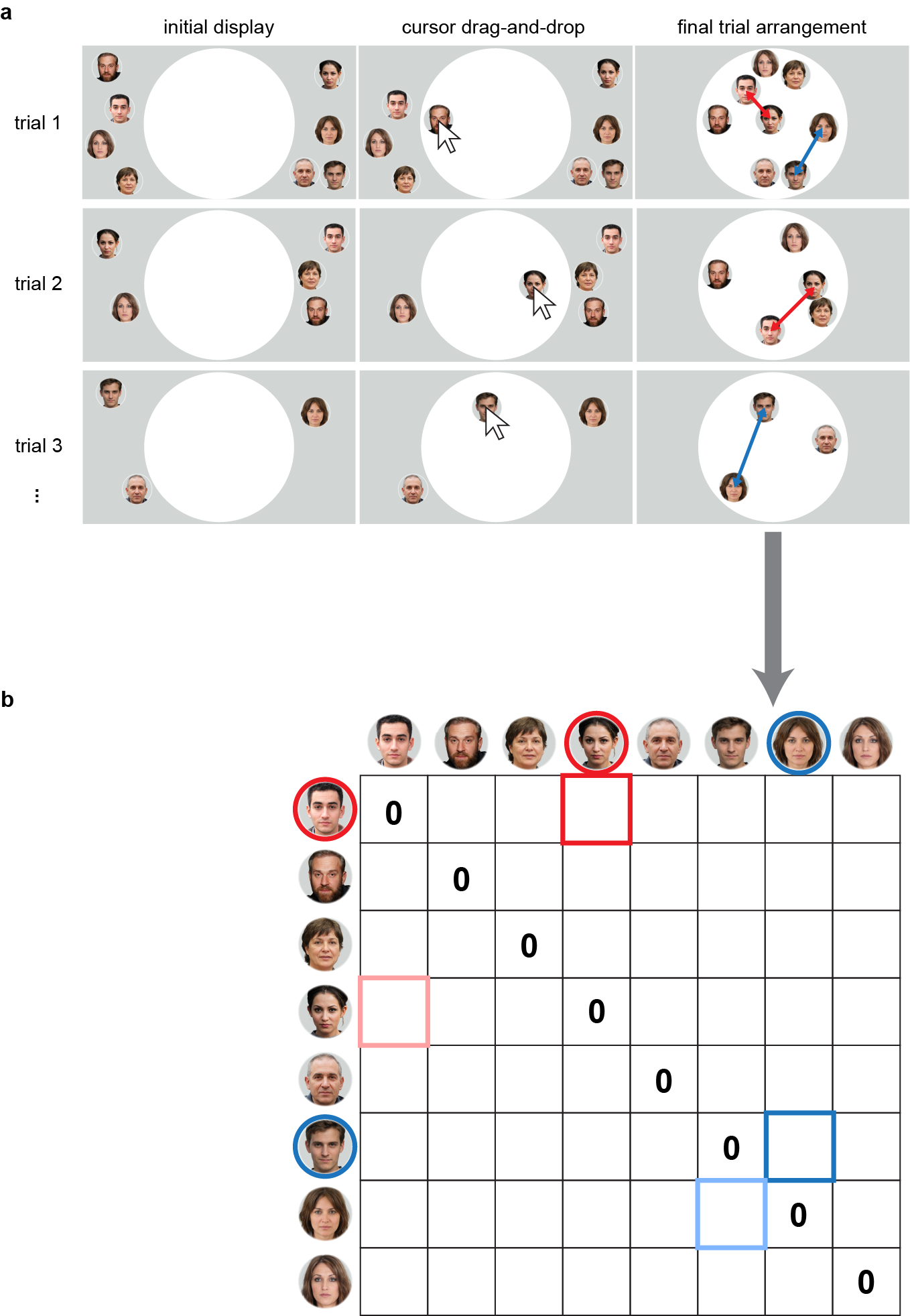

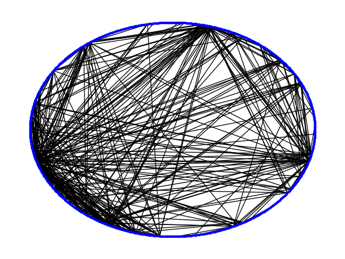

People reliably attribute psychological traits to faces. Prior research typically elicits these judgments using specific words provided by the experimenter (e.g., ratings on “competent” or “trustworthy”), which raises questions about completeness (tasks use a limited set of words) and generalizability (e.g., across languages). In two experiments (Experiment 1 with predominantly White faces; Experiment 2 with White, Black, and Asian faces), we asked over 3,000 participants to spatially arrange unfamiliar faces based on perceived trait similarity, without providing any word labels. Experiment 1 yielded five dimensions that we interpret as approachability, gender-specific warmth, competence, morality, and youthfulness. Experiment 2 also produced five dimensions, which showed prominent effects of race-associated stereotypes. Comparisons with four other studies that used word labels suggest that trait judgments are characterized by two to five dimensions, that approachability/warmth emerges as a universal evaluative factor, and that race and gender stereotypes emerge depending on the set of face stimuli used. (See the preprint, data, and GitHub code.)
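The abstract does not describe the analysis pipeline, so the following is only a simplified sketch of how a label-free arrangement task can yield dimensions, under stated assumptions. It assumes each participant places every face on a 2D canvas once, rescales that participant's pairwise distances so the largest is 1, averages them into a group dissimilarity matrix, and then applies classical multidimensional scaling (power iteration with deflation) to recover candidate dimensions. Classical MDS is a swapped-in technique chosen for illustration, not necessarily the authors' method, and the function names `average_dissimilarity` and `classical_mds` and the max-distance normalization are hypothetical. Interpreting the recovered axes (e.g., as approachability or competence) would require further analysis not shown here.

```python
import math
import random

Point = tuple[float, float]


def arrangement_distances(positions: list[Point]) -> list[list[float]]:
    """Pairwise Euclidean distances between faces placed by one participant."""
    n = len(positions)
    return [[math.dist(positions[i], positions[j]) for j in range(n)] for i in range(n)]


def average_dissimilarity(arrangements: list[list[Point]]) -> list[list[float]]:
    """Average distance matrices across participants.

    Assumption (hypothetical): each participant's distances are divided by that
    participant's largest distance, so differences in overall canvas use cancel out.
    """
    n = len(arrangements[0])
    total = [[0.0] * n for _ in range(n)]
    for positions in arrangements:
        d = arrangement_distances(positions)
        scale = max(max(row) for row in d) or 1.0
        for i in range(n):
            for j in range(n):
                total[i][j] += d[i][j] / scale
    m = len(arrangements)
    return [[v / m for v in row] for row in total]


def classical_mds(d: list[list[float]], k: int, iters: int = 500, seed: int = 0) -> list[list[float]]:
    """Embed a dissimilarity matrix in k dimensions via classical (Torgerson) MDS."""
    n = len(d)
    # Double-center the squared distances: B = -1/2 * J D^2 J (J = centering matrix).
    sq = [[v * v for v in row] for row in d]
    row_mean = [sum(r) / n for r in sq]  # equals column means because D is symmetric
    grand = sum(row_mean) / n
    b = [[-0.5 * (sq[i][j] - row_mean[i] - row_mean[j] + grand) for j in range(n)] for i in range(n)]

    rng = random.Random(seed)
    coords = [[0.0] * k for _ in range(n)]
    for axis in range(k):
        # Power iteration converges to the eigenvector of largest magnitude;
        # this assumes positive eigenvalues dominate, as for near-Euclidean data.
        v = [rng.random() - 0.5 for _ in range(n)]
        for _ in range(iters):
            w = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(b[i][j] * v[j] for j in range(n)) for i in range(n))
        # Deflate B so the next pass finds the next eigenvector.
        b = [[b[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
        scale = math.sqrt(max(lam, 0.0))
        for i in range(n):
            coords[i][axis] = v[i] * scale
    return coords
```

For example, two participants who place four faces along a line at different overall scales produce the same normalized dissimilarity matrix, and a one-dimensional embedding reproduces those distances up to sign and translation.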