25. Course-Prerequisite Networks for Analyzing and Understanding Academic Curricula

P. Stavrinides and K.M. Zuev

Applied Network Science, vol. 8, article 19, April 2023.

[Web DOI | Paper pdf | arXiv physics.soc-ph 2210.01269]

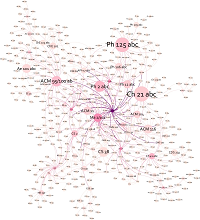

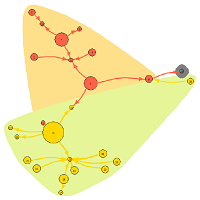

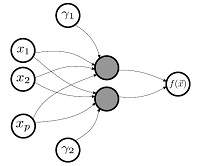

Abstract: Understanding a complex system

of relationships between courses is of great importance for

the university’s educational mission. This paper is

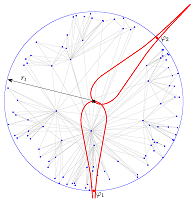

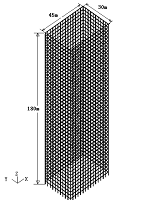

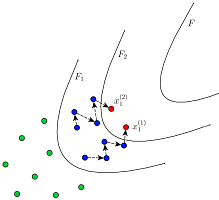

dedicated to the study of course-prerequisite networks (CPNs),

where nodes represent courses and directed links represent

the formal prerequisite relationships between them. The main

goal of CPNs is to model interactions between courses, represent

the flow of knowledge in academic curricula, and serve as

a key tool for visualizing, analyzing, and optimizing complex

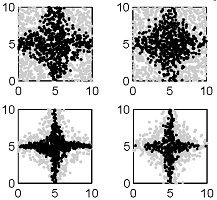

curricula. First, we consider several classical centrality

measures, discuss their meaning in the context of CPNs, and

use them for the identification of important courses. Next,

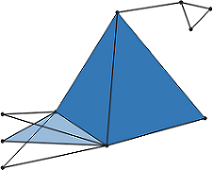

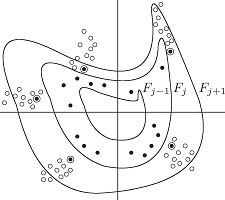

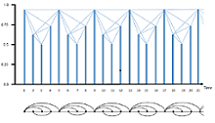

we describe the hierarchical structure of a CPN using the

topological stratification of the network. Finally, we perform

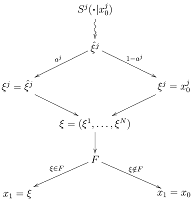

the interdependence analysis, which allows to quantify the

strength of knowledge flow between university divisions and

helps to identify the most intradependent, influential, and

interdisciplinary areas of study. We discuss how course-prerequisite

networks can be used by students, faculty, and administrators

for detecting important courses, improving existing and creating

new courses, navigating complex curricula, allocating teaching

resources, increasing interdisciplinary interactions between

departments, revamping curricula, and enhancing the overall

students’ learning experience. The proposed methodology

can be used for the analysis of any CPN, and it is illustrated

with a network of courses taught at the California Institute

of Technology. The network data analyzed in this paper is

publicly available in the GitHub repository.
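As a rough illustration of the three analyses named in the abstract, the sketch below builds a toy CPN and computes degree counts, a layering of the network, and link counts between divisions. It is not the paper's code or data: the course names and prerequisite pairs are hypothetical, the layering rule (repeatedly peeling off courses with no remaining prerequisites) is one common way to stratify a directed acyclic graph and may differ from the paper's exact definition, and raw link counts are only a crude stand-in for the interdependence analysis.

```python
from collections import defaultdict

# Hypothetical toy curriculum: each pair is (prerequisite, course).
EDGES = [
    ("Ma1", "Ma2"),
    ("Ma1", "Ph1"),
    ("Ma2", "Ph2"),
    ("Ph1", "Ph2"),
    ("Ma2", "CS1"),
]


def degrees(edges):
    """Return (in_degree, out_degree) dicts covering every course in the network."""
    in_deg, out_deg = defaultdict(int), defaultdict(int)
    for pre, course in edges:
        out_deg[pre] += 1
        in_deg[course] += 1
        # Touch the other counters so every node appears in both dicts.
        in_deg[pre] += 0
        out_deg[course] += 0
    return dict(in_deg), dict(out_deg)


def strata(edges):
    """Layer an acyclic CPN by peeling off courses with no remaining prerequisites."""
    in_deg, _ = degrees(edges)
    successors = defaultdict(list)
    for pre, course in edges:
        successors[pre].append(course)
    layer = sorted(n for n, d in in_deg.items() if d == 0)
    layers = []
    while layer:
        layers.append(layer)
        nxt = []
        for node in layer:
            for child in successors[node]:
                in_deg[child] -= 1
                if in_deg[child] == 0:
                    nxt.append(child)
        layer = sorted(nxt)
    return layers


def division_links(edges):
    """Count prerequisite links between divisions (assumed to be the letter prefix)."""
    counts = defaultdict(int)
    for pre, course in edges:
        key = (pre.rstrip("0123456789"), course.rstrip("0123456789"))
        counts[key] += 1
    return dict(counts)


if __name__ == "__main__":
    in_deg, out_deg = degrees(EDGES)
    print("out-degree:", out_deg)
    print("strata:", strata(EDGES))
    print("division links:", division_links(EDGES))
```

In this toy network, a high out-degree marks a course that many others build on, the first stratum contains courses with no prerequisites, and off-diagonal division pairs such as ("Ma", "Ph") indicate knowledge flowing from one division to another.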